Google is making waves with Gemini, its flagship suite of generative AI models, applications, and services. But what exactly is Gemini? How can you use it? And how does it compare to other generative AI tools like OpenAI’s ChatGPT, Meta’s Llama, and Microsoft’s Copilot?

To help you stay up to date with Gemini’s latest developments, we’ve created this comprehensive guide. We’ll continue updating it as Google releases new Gemini models, features, and announcements.

What is Gemini?

Gemini is Google’s next-generation family of generative AI models, developed by its AI research divisions, DeepMind and Google Research. It comes in four variations:

Gemini Ultra – The largest and most powerful model.

Gemini Pro – A slightly smaller but still robust model. The latest version, Gemini 2.0 Pro Experimental, serves as Google’s flagship.

Gemini Flash – A streamlined, faster version of Pro. It also includes:

Gemini Flash-Lite, a more compact and speed-optimized variant.

Gemini Flash Thinking Experimental, designed with enhanced reasoning capabilities.

Gemini Nano – A lightweight model available in two versions: Nano-1 and the more capable Nano-2, which can operate offline.

All Gemini models are designed to be natively multimodal, meaning they can process and analyze more than just text. Google states that these models were pre-trained and fine-tuned on a diverse dataset, including public, proprietary, and licensed audio, images, videos, code, and multilingual text.

This multimodal capability sets Gemini apart from models like Google’s earlier LaMDA, which was trained exclusively on text and cannot process non-text inputs.

However, ethical and legal concerns persist regarding AI models trained on public data, sometimes without explicit consent from data owners. While Google offers an AI indemnification policy to protect certain Google Cloud customers from potential lawsuits, this policy has limitations. Businesses considering Gemini for commercial use should proceed with caution.

What’s the Difference Between Gemini Apps and Gemini Models?

Gemini models and Gemini apps are not the same thing. The apps are clients that connect to various Gemini models and put a chatbot-style interface on top. They serve as the front end for Google’s generative AI, much like OpenAI’s ChatGPT and Anthropic’s Claude apps.

Gemini on the Web and Mobile

Gemini is accessible on the web, while on mobile, its availability varies by platform. On Android, the Gemini app has replaced Google Assistant, whereas on iOS, it operates through the Google and Google Search apps.

A recent update on Android allows users to overlay Gemini on any app, enabling contextual interactions, such as asking questions about on-screen content (e.g., a YouTube video). This feature is activated by pressing and holding the power button on supported devices or by saying, “Hey Google.”

Gemini accepts images, voice commands, and text as input. It can also process files such as PDFs and, soon, videos, whether uploaded directly or imported from Google Drive. Conversations stay in sync across platforms when users are signed in with the same Google Account.

Gemini Advanced and AI Integration

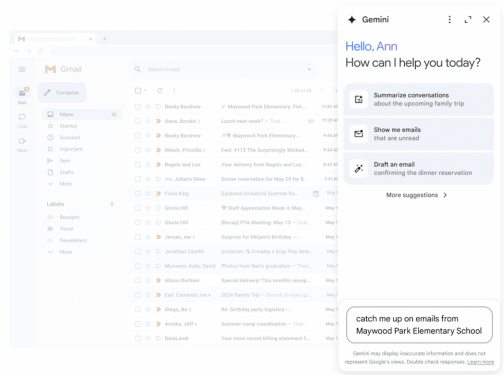

Beyond standalone apps, Gemini’s capabilities are gradually being integrated into core Google services such as Gmail and Google Docs.

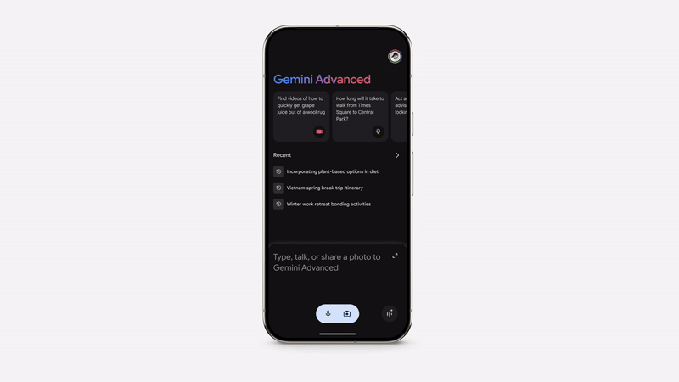

To unlock most AI-powered features, users need the Google One AI Premium Plan, a $20 monthly subscription that extends Gemini’s functionality across Google apps including Docs, Maps, Slides, Sheets, Drive, and Meet. This plan also grants access to Gemini Advanced, which offers more sophisticated AI models within the Gemini ecosystem.

Subscribers to Gemini Advanced also get early access to new features, the ability to run and edit Python code within Gemini, and a larger context window. While the standard Gemini app can analyze about 24,000 words (roughly 48 pages) at once, Gemini Advanced raises this to about 750,000 words (roughly 1,500 pages), so it can remember and reason across far more material in a single conversation.
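Both page figures imply the same conversion, roughly 500 words per page, and the larger limit holds about 31 times as much text. A quick check of that arithmetic, using only the numbers quoted in this article (the words-per-page ratio is inferred from them, not an official Google figure):

```latex
\frac{24{,}000\ \text{words}}{48\ \text{pages}}
  = \frac{750{,}000\ \text{words}}{1{,}500\ \text{pages}}
  = 500\ \text{words per page},
\qquad
\frac{750{,}000\ \text{words}}{24{,}000\ \text{words}} = 31.25
```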

Gemini Advanced: A Comprehensive AI Experience

Gemini Advanced provides users with access to Google’s Deep Research feature, leveraging advanced reasoning and long-context capabilities to generate in-depth research briefs. Upon receiving a prompt, the chatbot formulates a multi-step research plan for user approval. Once approved, Gemini searches the web and compiles a detailed report, designed to address complex inquiries such as, “Can you help me redesign my kitchen?”

Additionally, Gemini Advanced includes a memory feature, enabling the chatbot to reference previous conversations for contextual continuity. Subscribers also benefit from expanded access to NotebookLM, Google’s AI-powered tool that converts PDFs into audio-based summaries.

Another exclusive feature is early access to Google’s experimental Gemini 2.0 Pro, an advanced AI model optimized for complex coding and mathematical problem-solving.

Seamless Trip Planning and Integration Across Google Services

Gemini Advanced also enhances travel planning within Google Search, creating personalized itineraries based on user preferences. It factors in flight details (from Gmail), dining preferences, and insights from Google Search and Maps, automatically updating itineraries to reflect any changes.

For business users, Gemini is available through two enterprise plans: Gemini Business and Gemini Enterprise. Gemini Business starts at $6 per user per month, while Gemini Enterprise offers additional features such as AI-powered meeting notes, translated captions, and document classification, with pricing tailored to a company’s specific needs. (Both plans require an annual commitment.)

AI-Powered Assistance in Gmail, Docs, Chrome, and More

Gemini is built into Google Workspace. In Gmail, it helps draft and summarize emails. In Docs, it supports writing, editing, and brainstorming. In Slides, it generates presentations and custom images, and in Sheets, it organizes data by creating tables and formulas.

Recent updates have also brought Gemini to Google Maps, where it summarizes reviews and offers recommendations for exploring new cities. In Drive, it can summarize files and folders, and in Meet, it translates captions into multiple languages.

Many of these Workspace features require a Gemini Advanced subscription (through the Google One AI Premium Plan) or one of Google’s Gemini business plans.

Google has integrated Gemini into its Chrome browser as an AI-powered writing tool. Users can generate entirely new content or refine existing text, with Gemini providing recommendations based on the web page they are viewing.

Beyond Chrome, Gemini is embedded across various Google products, including its database services, cloud security tools, and app development platforms such as Firebase and Project IDX. It also appears in Google Photos, where it handles natural language search queries; YouTube, where it helps creators brainstorm video ideas; and NotebookLM, Google’s AI-driven note-taking assistant.

In software development, Gemini powers Code Assist (formerly Duet AI for Developers), a suite of AI tools for code completion and generation. It also underpins Google’s security offerings, such as Gemini in Threat Intelligence, which can analyze large portions of potentially malicious code and lets users run natural language searches for ongoing threats or indicators of compromise.

Gemini Extensions and Custom Chatbots (Gems)

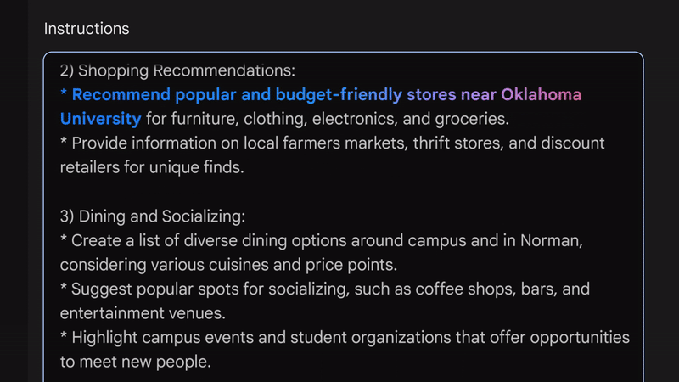

At Google I/O 2024, the company introduced Gems, a feature within Gemini Advanced that enables users to create custom AI chatbots. These chatbots can be tailored through natural language prompts—for instance, “You’re my running coach. Provide a daily running plan”—and can be shared publicly or kept private.

Gems are available on desktop and mobile in 150 countries and in most languages. In the future, they will connect to more Google services, including Google Calendar, Tasks, Keep, and YouTube Music, to complete custom tasks.

Gemini Integrations and Live Voice Chats

Expanding Google Service Integrations

The Gemini apps on web and mobile can connect to other Google services through what Google calls “Gemini extensions.” Currently, Gemini works with Google Drive, Gmail, and YouTube, letting users ask things like, “Could you summarize my last three emails?”

Later this year, Gemini’s capabilities will expand to include Google Calendar, Keep, Tasks, and YouTube Music. Additionally, it will integrate with Android-exclusive utilities that control on-device features such as timers, alarms, media playback, flashlight, volume, Wi-Fi, and Bluetooth.

Gemini Live: Interactive Voice Conversations

A feature called Gemini Live allows users to engage in real-time, in-depth voice conversations with the AI. Available on mobile Gemini apps and the Pixel Buds Pro 2, this feature remains accessible even when the phone is locked.

With Gemini Live, users can interrupt the chatbot mid-response to ask clarifying questions. The AI dynamically adapts to the user’s speech patterns in real time, creating a more natural interaction. In the future, Gemini is expected to incorporate visual understanding, enabling it to process and respond to images and videos captured via smartphone cameras.

Live is also designed to function as a virtual coach, assisting users in rehearsing for events, brainstorming ideas, and more. For example, it can recommend key skills to emphasize during job or internship interviews and provide guidance on public speaking.

We’ve reviewed Gemini Live. Spoiler alert: the feature shows promise, but it needs more work before it becomes truly useful, though it is still in its early stages.

Image Generation with Imagen 3

Gemini users can create artwork and images using Google’s integrated Imagen 3 model.

According to Google, Imagen 3 offers a more precise interpretation of text prompts compared to its predecessor, Imagen 2. The model is also described as more creative and detailed in its outputs while minimizing artifacts and visual errors. Google claims that Imagen 3 is its most advanced version yet, particularly in rendering text accurately.

Google’s Gemini Updates: Image Generation, Teen-Focused Features, and Smart Home Integration

Restoring People Image Generation

In February 2024, Google paused Gemini’s ability to generate images of people after users complained about historical inaccuracies. In August 2024, the company reintroduced the feature for select users, specifically those subscribed to its paid Gemini plans, such as Gemini Advanced, as part of a pilot program for English-language users.

Gemini for Teens

In June, Google launched a version of Gemini tailored for teenagers, enabling students to access the AI through their Google Workspace for Education school accounts.

This teen-focused experience includes additional policies and safeguards, such as a customized onboarding process and an AI literacy guide designed to help young users engage with AI responsibly. Despite these enhancements, it remains largely similar to the standard Gemini experience, including the “double check” feature, which verifies responses by cross-referencing information from the web.

Gemini in Smart Home Devices

An increasing number of Google devices now leverage Gemini for enhanced functionality. This includes the Google TV Streamer, the Pixel 9 and 9 Pro, and the latest Nest Learning Thermostat.

On the Google TV Streamer, Gemini personalizes content recommendations based on user preferences, summarizes reviews, and even condenses entire TV seasons into brief overviews.

The latest Nest thermostat, along with Nest speakers, cameras, and smart displays, will soon benefit from Gemini’s enhanced conversational and analytical capabilities within Google Assistant.

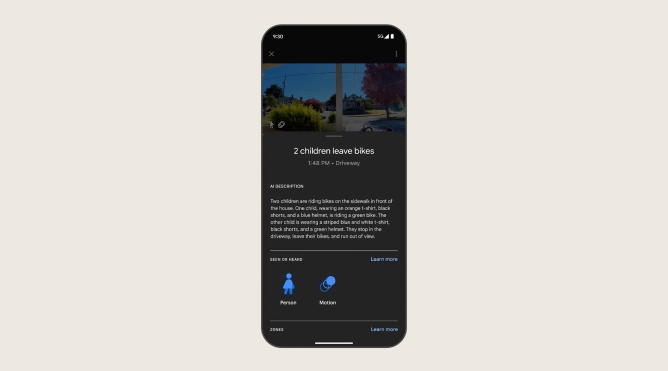

Later this year, subscribers to Google’s Nest Aware plan will get early access to new Gemini-powered features, including AI-generated descriptions of Nest camera footage, natural language video search, and suggested automations. Nest cameras will be able to interpret real-time video feeds, for instance noticing when a dog is digging in the garden, while the Google Home app will let users retrieve relevant footage and set up automations from plain-language descriptions. For example, users can ask, “Did the kids leave their bikes in the driveway?” or have their Nest thermostat turn on the heating every Tuesday when they arrive home from work.

Gemini will soon be able to summarize security camera footage from Nest devices.

Image Credits: Google

Google Assistant Upgrades and the Capabilities of Gemini Models

Later this year, Google Assistant will receive upgrades on Nest-branded and other smart home devices to enhance conversational fluidity. These updates include improved voice quality and the ability to ask follow-up questions more naturally.

What Can Gemini Models Do?

As multimodal AI systems, Gemini models handle diverse tasks, including speech transcription and real-time image and video captioning. Many of these features are already integrated into products, with more enhancements expected soon.

However, Google’s credibility in this space has been questioned. The underwhelming launch of Bard and a misleading promotional video for Gemini have raised concerns. Additionally, like its competitors, Google has yet to resolve fundamental AI issues such as bias and hallucination.

Assuming Google’s claims hold true, here’s a breakdown of what different Gemini tiers offer now and what they aim to achieve:

Gemini Ultra: Advanced AI for Complex Tasks

Gemini Ultra is designed for advanced problem-solving, such as helping with physics homework, identifying mistakes in worked answers, and analyzing scientific papers. The model can extract information from multiple papers and update a chart from one of them with more recent data.

Gemini Ultra can technically generate images, but this capability has not yet made it into the production version of the model. Unlike models that hand prompts to a separate image generator (as ChatGPT does with DALL-E 3), Gemini Ultra generates images natively.

Currently, Ultra is available via Google’s Vertex AI platform and AI Studio for developers. However, its presence in consumer-facing products remains limited.

Gemini Pro: Powerful and Customizable AI

Google says the latest iteration, Gemini 2.0 Pro, is its strongest Pro model yet for coding, reasoning, math, and factual accuracy. It can process up to 1.4 million words, two hours of video, or 22 hours of audio and answer questions about that data.

Developers can fine-tune Gemini Pro for specific applications using Vertex AI. They can also ground its responses in data from third-party providers such as Moody’s and Thomson Reuters or in their own corporate datasets, and connect it to external APIs to automate workflows.

AI Studio offers tools for building structured chat prompts, letting developers adjust the model’s tone, style, and safety settings. Meanwhile, Vertex AI Agent Builder lets developers create Gemini-powered agents; for example, an agent could analyze a company’s previous marketing campaigns to learn its brand style and then generate new ideas consistent with that style.
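AI Studio exposes these controls in its interface, and the Gemini API accepts the same kinds of settings as fields of a request. The minimal Python sketch below only builds and prints a request body. It assumes the public v1beta `generateContent` REST endpoint and its JSON field names (`systemInstruction`, `safetySettings`, `generationConfig`); the function name `build_request`, the model name, the persona text, and the safety thresholds are illustrative choices. Actually sending the request would need an API key and an HTTP client, which are not shown.

```python
import json

# Assumption: the public Gemini API's v1beta REST endpoint for text generation.
ENDPOINT = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"


def build_request(prompt: str, persona: str, temperature: float = 0.7) -> dict:
    """Build a generateContent request body that sets tone, style, and safety."""
    return {
        # The system instruction steers tone and style for the whole conversation.
        "systemInstruction": {"parts": [{"text": persona}]},
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        # Block harassment and dangerous content rated medium probability or higher.
        "safetySettings": [
            {"category": "HARM_CATEGORY_HARASSMENT", "threshold": "BLOCK_MEDIUM_AND_ABOVE"},
            {"category": "HARM_CATEGORY_DANGEROUS_CONTENT", "threshold": "BLOCK_MEDIUM_AND_ABOVE"},
        ],
        # Lower temperatures make responses less varied from run to run.
        "generationConfig": {"temperature": temperature},
    }


body = build_request(
    prompt="What should today's run look like?",
    persona="You're my running coach. Keep answers short and encouraging.",
)
print(ENDPOINT.format(model="gemini-2.0-flash"))
print(json.dumps(body, indent=2))
```

The persona string plays a role similar to a Gem’s custom instructions, such as Google’s running-coach example; changing it, or the temperature, adjusts tone and consistency without retraining the model.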

Gemini Flash: Lightweight Yet Capable

Gemini 2.0 Flash is optimized for speed and efficiency, making it well suited to summarization, chat applications, and data extraction. Beyond text, it can generate images and audio, interact with external APIs, and use tools such as Google Search.

In December, Google introduced a “thinking” version of Flash that improves its reasoning by taking a few extra seconds to work through a problem before answering. In February, Google brought this model to the Gemini app and released Flash-Lite, a smaller model that the company says outperforms 1.5 Flash at the same price and speed.

Developers can use context caching with Flash and Pro to store large amounts of input, such as long documents, so that repeated requests can reuse it more quickly and cheaply, though storing the cache carries an additional fee.
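To see when caching pays off, here is a rough cost model in Python. Everything in it is an assumption for illustration: the function name `caching_costs`, the simplified billing model (a reduced per-request rate for cached tokens plus an hourly storage fee, ignoring output tokens and any one-time charge for creating the cache), and every rate in the example, which are made-up numbers rather than Google’s prices.

```python
def caching_costs(
    prefix_tokens: int,
    requests: int,
    input_rate: float,
    cached_rate: float,
    storage_rate_per_hour: float,
    hours: float,
) -> tuple[float, float]:
    """Return (cost without caching, cost with caching) in USD for a shared prompt prefix.

    Rates are hypothetical inputs in USD per 1M tokens (storage: per 1M tokens per hour).
    Simplified model: ignores the non-shared part of each prompt, output tokens,
    and any one-time charge for creating the cache.
    """
    millions = prefix_tokens / 1_000_000
    # Without caching, the full prefix is billed at the normal input rate on every request.
    without_cache = requests * millions * input_rate
    # With caching, each request pays the reduced cached rate, plus an hourly storage fee.
    with_cache = requests * millions * cached_rate + millions * storage_rate_per_hour * hours
    return without_cache, with_cache


# Hypothetical numbers: a 500,000-token document queried 100 times over two hours.
plain, cached = caching_costs(500_000, 100, input_rate=1.00, cached_rate=0.25,
                              storage_rate_per_hour=1.00, hours=2)
print(f"without cache: ${plain:.2f}, with cache: ${cached:.2f}")
```

With these made-up rates, caching saves money once a large prefix is reused many times; for a single request, the storage fee makes caching more expensive than simply resending the document.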

Gemini Nano: AI on Your Phone

Designed for mobile efficiency, Gemini Nano runs directly on select devices, including the Pixel 8 Pro, Pixel 9, and Samsung Galaxy S24. It powers features like Summarize in Recorder and Smart Reply in Gboard, processing data locally for enhanced privacy.

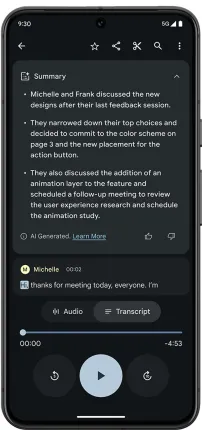

The Recorder app transcribes and summarizes conversations without requiring an internet connection, ensuring user data remains on-device.

Gemini Nano and Its Applications

Gemini Nano is integrated into Gboard, Google’s keyboard replacement, where it powers the Smart Reply feature. This functionality suggests responses during conversations in messaging apps like WhatsApp, streamlining communication.

In the Google Messages app on supported devices, Nano enables Magic Compose, a tool that can craft messages in various tones, such as “excited,” “formal,” or “lyrical.”

Google has also announced plans for Nano’s future applications. A forthcoming Android update will leverage Nano to detect potential scams during phone calls. Additionally, the new weather app on Pixel phones utilizes Gemini Nano to generate personalized weather reports. Nano also enhances TalkBack, Google’s accessibility service, by providing aural descriptions of objects for users with low vision or blindness.

Gemini Model Pricing

Gemini models, including 1.5 Pro, 1.5 Flash, 2.0 Flash, and 2.0 Flash-Lite, are available through Google’s Gemini API for app and service development. Free tiers are offered, though they come with usage limits and exclude features like context caching and batching.

For paid usage, pricing follows a pay-as-you-go structure:

Gemini 1.5 Pro

- $1.25 per 1 million input tokens (for prompts up to 128K tokens)

- $2.50 per 1 million input tokens (for prompts exceeding 128K tokens)

- $5.00 per 1 million output tokens (for prompts up to 128K tokens)

- $10.00 per 1 million output tokens (for prompts exceeding 128K tokens)

Gemini 1.5 Flash

- $0.075 per 1 million input tokens (for prompts up to 128K tokens)

- $0.15 per 1 million input tokens (for prompts exceeding 128K tokens)

- $0.30 per 1 million output tokens (for prompts up to 128K tokens)

- $0.60 per 1 million output tokens (for prompts exceeding 128K tokens)

Gemini 2.0 Flash

- $0.10 per 1 million input tokens

- $0.40 per 1 million output tokens

- $0.70 per 1 million input tokens (for audio processing)

- $0.40 per 1 million output tokens (for audio processing)

Gemini 2.0 Flash-Lite

- $0.075 per 1 million input tokens

- $0.30 per 1 million output tokens

Note: Tokens are subdivided bits of raw data; for example, the word “fantastic” might be split into the tokens “fan,” “tas,” and “tic.” One million tokens is roughly equivalent to 700,000 words. Input tokens are the data fed into the model, while output tokens are the data the model generates.
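The pay-as-you-go arithmetic can be sketched in a few lines of Python. This is a rough estimator under stated assumptions, not an official calculator: the prices are copied from the list above, the dictionary keys are just labels, “128K” is read as 128,000 tokens, the prompt length is assumed to select the price tier for both input and output on the 1.5 models (as Google’s price list describes), 2.0 Flash audio input is not modeled, and word counts are converted with the 700,000-words-per-million-tokens rule of thumb.

```python
# Pay-as-you-go prices in USD per 1M tokens, copied from the list above.
# Tuple layout: (input, input_long, output, output_long); the "_long" rates
# apply to prompts longer than 128K tokens (only the 1.5 models differ).
PRICES = {
    "gemini-1.5-pro": (1.25, 2.50, 5.00, 10.00),
    "gemini-1.5-flash": (0.075, 0.15, 0.30, 0.60),
    "gemini-2.0-flash": (0.10, 0.10, 0.40, 0.40),  # text input; audio input is not modeled
    "gemini-2.0-flash-lite": (0.075, 0.075, 0.30, 0.30),
}
LONG_PROMPT_TOKENS = 128_000  # assumption: "128K" read as 128,000 tokens
WORDS_PER_TOKEN = 0.7  # rule of thumb from the note: 1M tokens ~ 700,000 words


def words_to_tokens(words: int) -> int:
    """Rough token estimate for a word count, using the 0.7 words-per-token rule."""
    return round(words / WORDS_PER_TOKEN)


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request; the prompt length picks the price tier."""
    inp, inp_long, out, out_long = PRICES[model]
    if input_tokens > LONG_PROMPT_TOKENS:
        inp, out = inp_long, out_long
    return (input_tokens * inp + output_tokens * out) / 1_000_000


if __name__ == "__main__":
    prompt = words_to_tokens(70_000)  # a 70,000-word manuscript, about 100,000 tokens
    for model in PRICES:
        print(f"{model}: ${estimate_cost(model, prompt, 2_000):.4f}")
```

By the same rule of thumb, the 1.4 million words quoted for Gemini 2.0 Pro correspond to about 2 million tokens (`words_to_tokens(1_400_000)`). Real token counts vary with language and content, so treat these figures as ballpark estimates.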

Pricing for Gemini 2.0 Pro has yet to be announced, and Gemini Nano remains in early access.

Updates on Project Astra

Project Astra is Google DeepMind’s initiative to develop AI-powered applications and agents capable of real-time, multimodal understanding. In recent demonstrations, the AI has shown its ability to process live video and audio simultaneously.

In December, Google released a test version of Project Astra as an app for a small group of trusted testers. However, there are no immediate plans for a broader rollout.

Google has also explored integrating Astra into smart glasses. A prototype featuring Project Astra and augmented reality (AR) capabilities was provided to select testers in December. However, there is no finalized product or clear timeline for a potential release.

At this stage, Project Astra remains a research initiative rather than a commercial product, but it offers a glimpse into Google’s AI ambitions for the future.

Is Gemini Coming to the iPhone?

Apple has confirmed that it is in discussions about integrating Gemini and other third-party AI models into its Apple Intelligence suite. Following the WWDC 2024 keynote, Craig Federighi, Apple’s senior vice president of software engineering, acknowledged plans to work with models including Gemini but provided no further details.